In the rapidly evolving world of augmented reality (AR), the lab-based prototype’s current field of view stands at a relatively modest 11.7 degrees. That is considerably narrower than the offerings from industry leaders such as Magic Leap 2 and Microsoft HoloLens. However, what it lacks in field of view, it makes up for in potential.

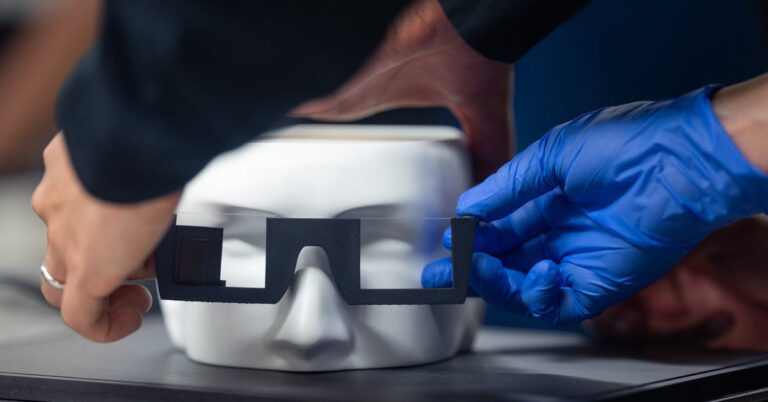

Stanford University’s Computational Imaging Laboratory is pioneering a new approach with its stack of holographic components. These components promise to integrate seamlessly into standard eyeglass frames. More impressively, they are designed to project realistic, full-color, moving 3D images at various depths, offering a glimpse into the future of immersive AR experiences.

Like other AR glasses, the Stanford design relies on waveguides, but the researchers have introduced an innovation dubbed the “nanophotonic metasurface waveguide.” This development marks a significant step forward: it eliminates the need for bulky collimating optics and employs a “learned physical waveguide model.” This model significantly improves image quality by using AI algorithms, and it is calibrated automatically using camera feedback.

While still in its prototype stage, Stanford’s technology represents a radical shift from the current spatial computing paradigm, challenging the status quo of bulky pass-through hybrids such as Apple’s Vision Pro headset and Meta’s Quest 3, among others. The transition to a more wearable-friendly future seems imminent.

Postdoctoral researcher Gun-Yeal Lee, who was instrumental in authoring the study published in Nature, underscores the unparalleled functionality and compactness of this AR system. The assertion not only reiterates Stanford’s commitment to pioneering next-generation AR technologies but also sets a new benchmark in the industry.